In an article titled “The Worst Programmer I Know,” Dan North, the creator of behavior-driven development, writes about a nearly fired developer he saved from the unemployment line.

This developer consistently delivered zero story points, even though story points delivered was the primary metric for developer productivity at their (unnamed) software consultancy. North defended the developer from an imminent firing because, he writes, “Tim wasn’t delivering software; Tim was delivering a team that was delivering software.”

The debate about developer productivity has been raging for decades, but few high-level papers and frameworks capture the truth this short post does. Development work is complex; measuring it is a wicked problem; and executives focused on measurement alone are not to be trusted.

The debate is as much top-down as bottom-up. McKinsey, for example, recently proposed a developer productivity framework that the developer community severely criticized. And anyone from a junior developer to a CTO can go viral on Twitter at a moment’s notice if they claim that 10x developers exist.

The impossibility of the conversation points to an upstream problem. The debate won’t end with this post, but I hope the post gives you a new perspective to consider, one that requires us to reconsider where this problem gets solved.

The developer productivity debate (and why it never ends)

The debate about developer productivity is never-ending.

Despite reams of research and countless team and company-wide attempts, there’s little consensus on what we should measure, how to use the results of those measurements, and whether measuring developer productivity is even possible. The debate won’t end until we can identify the core conflict.

Three broad parties drive the debate: executives who think we must measure developer productivity, developers who think we shouldn’t measure developer productivity, and skeptics who think we can’t measure developer productivity.

Executives often feel driven to understand performance, especially in a macroeconomic environment that increasingly demands predictability and profitability. The closer development work is to the company’s profit center, the more they will want to understand how to boost and maintain productivity levels. Some executives want more control, and some executives just want more clarity, but inevitably, it seems, companies grow to a stage where executives need visibility into productivity.

Developers tend to intuit, contra both types of executive, that any developer productivity metric is likely to lead to a draconian workflow. They know, too, that in practice any given theory matters less than the managers wielding it.

You don’t have to imagine a mustache-twirling McKinsey consultant to picture a bad scenario, either. For example, a well-meaning manager applying a metric that works for an SRE team to a frontend team will cause chaos.

Skeptics tend to take a higher-level perspective than developers. Instead of pushing away metrics with fear, skeptics push away metrics with disdain.

Some skeptics argue that development work is too nuanced and too interdependent to be measurable. Some say that, to the extent developer productivity can be measured, any metrics would result in unintended consequences or misaligned incentives (lines of code, for example, could lead to a lot of junk code and tech debt).

These groups are broad and generalized, but they illustrate the dynamic at play, the dynamic that ensures this debate won’t end.

The theories and the realities

The developer productivity debate reignites every time a new theory claims to satisfy all parties.

Some theories are more nuanced than others, but many fail to satisfy developers or skeptics because, in practice, executives tend to wield (at best) blunt versions of the metrics these theories propose. The devil, as always, is in the implementation.

The most recent discourse came about because McKinsey proposed a developer productivity framework that included specialized McKinsey metrics. In response, Gergely Orosz and Kent Beck wrote a critique of McKinsey’s work while proposing their own framework.

The authors captured a concern many developers likely felt while reading McKinsey’s proposal. They write, “The reality is engineering leaders cannot avoid the question of how to measure developer productivity today. If they try to, they risk the CEO or CFO turning instead to McKinsey, who will bring their custom framework.”

Predating the current debate (but still relevant to the wider discourse) are a few major, well-researched frameworks, including DORA, SPACE, and DevEx.

All three of these frameworks emerged in response to models that were much less nuanced and much more ad hoc. From the early days, countless managers have tried to measure developers by, for example, lines of code and number of commits. The bottom-up frustration with these half-measures and their consequences inspired much of this research.

And yet, despite all this research, it’s the half-measures that dominate much of developers’ actual experiences. In reality, attempts to measure developer productivity tend to suffer from over-generalization or isolation.

When managers over-generalize developer productivity metrics, the metrics lose meaning as they are stretched across different contexts. Engineers might measure their work by features shipped; SREs might measure their work via overall system reliability; and project managers might index on business impact. Even valid measures become misleading when applied across different contexts.

Job categories only scratch the surface. IEEE research, for example, sorts developers into six categories by preferred working style: social developers, lone developers, focused developers, balanced developers, leading developers, and goal-oriented developers. A metric that might motivate a social developer could crush a focused developer.

Metrics can also be too narrow and isolated, and the results they point to can be narrower still. For example, a manager measuring productivity by lines of code committed misses that deleting code is often more valuable than writing it.
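To make the lines-of-code problem concrete, here is a minimal sketch. Everything in it is hypothetical: the `Commit` shape, the two developers, and the numbers are invented for illustration, and I'm assuming the metric is net lines (added minus deleted), which is only one of several ways a manager might count.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Commit:
    """Hypothetical commit summary; not any real tool's data model."""
    author: str
    added: int
    deleted: int


def net_lines_by_author(commits):
    """Score each author by net lines of code (added minus deleted)."""
    totals = {}
    for commit in commits:
        totals[commit.author] = totals.get(commit.author, 0) + commit.added - commit.deleted
    return totals


# Invented data: dev_a ships a feature with a lot of new code,
# dev_b removes a dead module and simplifies what remains.
COMMITS = [
    Commit("dev_a", added=900, deleted=50),
    Commit("dev_b", added=120, deleted=1400),
]

scores = net_lines_by_author(COMMITS)
for author, score in sorted(scores.items()):
    print(f"{author}: {score:+d} net lines")
```

Under this metric, the developer who made the codebase smaller and easier to maintain gets a negative score, which is exactly the kind of result a narrow metric can't explain on its own.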

Sometimes, even if a manager correctly measures a productivity dip, the results of that measurement don’t lead to insight. A team might be working slowly for many reasons, and the solutions aren’t always obvious or even local to that team. Platform teams, for example, can build development tools and workflows that make other developers vastly more productive. Similarly, new tools (such as AI coding tools) can improve the development experience or make developers more efficient.

Where productivity measurement should happen to drive outcomes, not inputs

The productivity debate is endless because we have parties with different motivations that can’t agree and endless fuel from frameworks that propose The New Way. The lack of progress despite the preponderance of work indicates a bottleneck, an upstream problem that’s making downstream effort unproductive.

I believe if we flip the debate on its head, we can actually make it solvable. The flip requires two changes:

Instead of considering developer productivity a leading indicator, we should consider it a lagging indicator.

Instead of using the results of productivity metrics as insights unto themselves, we should use them as cues for investigation.

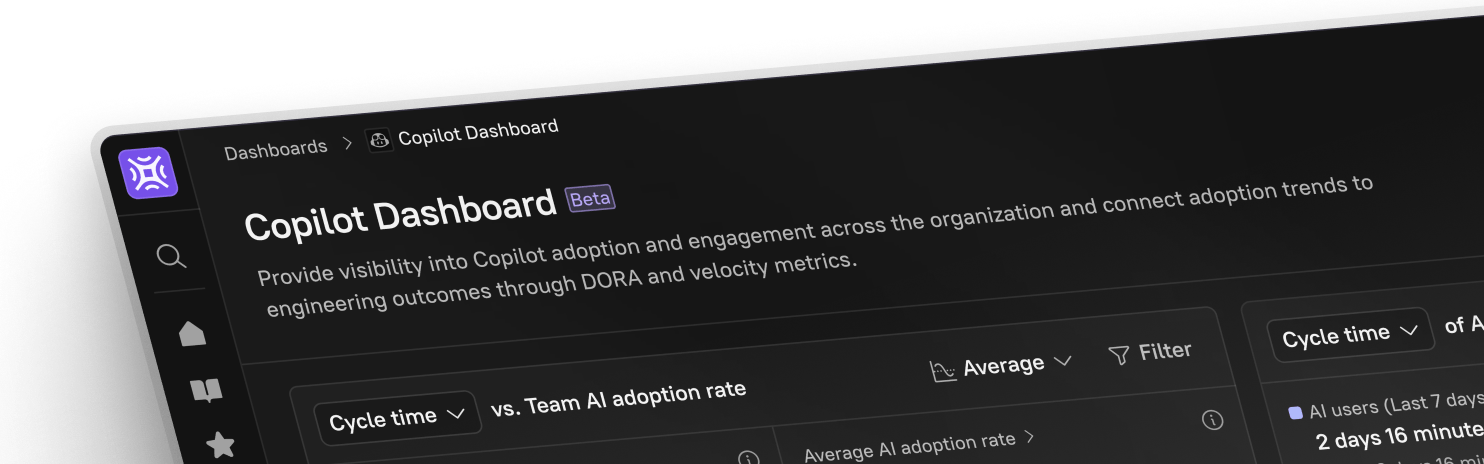

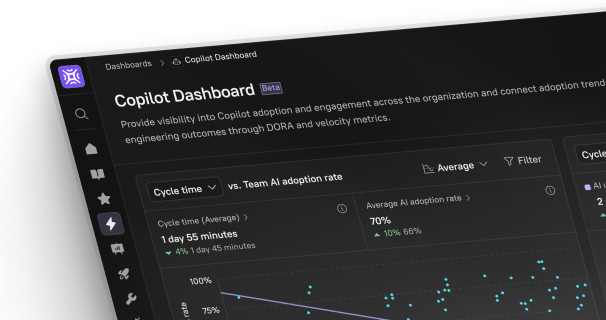

The only way to enable this shift is by positioning engineering metrics alongside two things: one, context that enables you to carry out deeper investigations, and two, capabilities that let you actually drive meaningful change. To be more direct (and maybe you saw where this was going), engineering metrics work best when they’re inside an Internal Developer Portal (IDP).

Take deploy frequency as an example. Without context, deploy frequency can be misleading. If deploy frequency dips, we have lots of questions but few answers.

Is the development team competent but untrained? Are they skilled but lacking direction? Are the development tools broken or inefficient? Should the particular team you’re looking at even be measured this way (e.g., a team working on a large, tier 0 service)?

In a context-rich IDP, however, the deploy frequency metric can be fruitful. With metrics measured over time, you might see that a given team deployed much more frequently last quarter than this quarter. Now, you have something to investigate. With metrics across teams, you might notice that one team is deploying much less frequently than another. Now, you have something to compare. And with information about when teammates joined, other programs they’ve been assigned, and software that they own, now, you have something to consider.
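As a rough sketch of the over-time comparison described above, the snippet below counts deploys per team per quarter and turns a sharp drop into a cue for investigation rather than a verdict. The team names, the deploy log, and the 50% drop threshold are all assumptions made up for this example; a real IDP would pull this data from its own sources and would attach the surrounding context (ownership, team changes, competing programs) that this toy version leaves out.

```python
from collections import Counter
from datetime import date

# Hypothetical deploy log as (team, deploy date) pairs; invented for illustration.
DEPLOYS = [
    ("payments", date(2024, 1, 9)),
    ("payments", date(2024, 2, 6)),
    ("payments", date(2024, 2, 27)),
    ("payments", date(2024, 3, 19)),
    ("payments", date(2024, 5, 14)),
    ("search", date(2024, 1, 16)),
    ("search", date(2024, 2, 13)),
    ("search", date(2024, 3, 12)),
    ("search", date(2024, 4, 9)),
    ("search", date(2024, 5, 7)),
    ("search", date(2024, 6, 4)),
]


def quarter_of(day: date) -> str:
    """Label a date with its calendar quarter, e.g. '2024-Q2'."""
    return f"{day.year}-Q{(day.month - 1) // 3 + 1}"


def deploys_per_quarter(deploys):
    """Count deploys keyed by (team, quarter)."""
    counts = Counter()
    for team, day in deploys:
        counts[(team, quarter_of(day))] += 1
    return counts


def investigation_cues(counts, previous, current, drop_threshold=0.5):
    """List teams whose deploy count fell below drop_threshold times the
    previous quarter's count. The threshold is an arbitrary assumption."""
    cues = []
    for team in sorted({team for team, _ in counts}):
        before = counts.get((team, previous), 0)
        after = counts.get((team, current), 0)
        if before > 0 and after < before * drop_threshold:
            cues.append(
                f"{team}: {before} deploys in {previous}, {after} in {current}; "
                "worth a conversation"
            )
    return cues


counts = deploys_per_quarter(DEPLOYS)
for cue in investigation_cues(counts, "2024-Q1", "2024-Q2"):
    print(cue)
```

Note what the function returns: a prompt to go ask questions, not a score. The dip by itself says nothing about why it happened, which is where the context in the portal comes in.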

Centralizing key engineering metrics within your IDP gives you access to the critical context you need to investigate, diagnose, and, most importantly, provide teams with curated paths to improvement that don’t gloss over nuanced differences in capability or competing priorities.

Once we reframe these metrics as lagging indicators of output rather than outcomes, we can treat them as cues and signals that inform the improvement programs we create (or the extra headcount we request), not as triggers for any particular action (much less punishment). Productivity metrics are raw data; we need to turn them into meaningful insight before doing anything.

What do you think?