Steve Jobs once said that giving customers what they wanted wasn’t his approach. “Our job is to figure out what they're going to want before they do,” he said instead. “People don't know what they want until you show it to them. Our task is to read things that are not yet on the page.”

By now, this wisdom has become canonical for companies targeting end-users, but we tend to ignore it when we’re building products and processes for professional users. Often, we invert the wisdom and give professionals solutions that only appear to solve their problems.

This is nowhere more true than in developer experience, tools, and productivity, where leaders assume the developer experience is all about freeing developers to spin up services whenever and however they want.

At scale, however, enabling this kind of self-service without helping developers understand the context of the system they work in can make the developer experience worse than when you started. The developer experience starts before developers even begin configuring a new service, and if developers can’t access context, self-service can lead to complexity costs that outweigh the experience leaders were trying to improve.

Echoing Jobs, the manager's job isn’t to give people what they ask for but to read between the lines, look at the needs beneath the wants, and solve problems in a lasting way that works for the entire team. To improve the developer experience, leaders first have to lay the necessary foundation and ensure developers can access information before they spin up anything new.

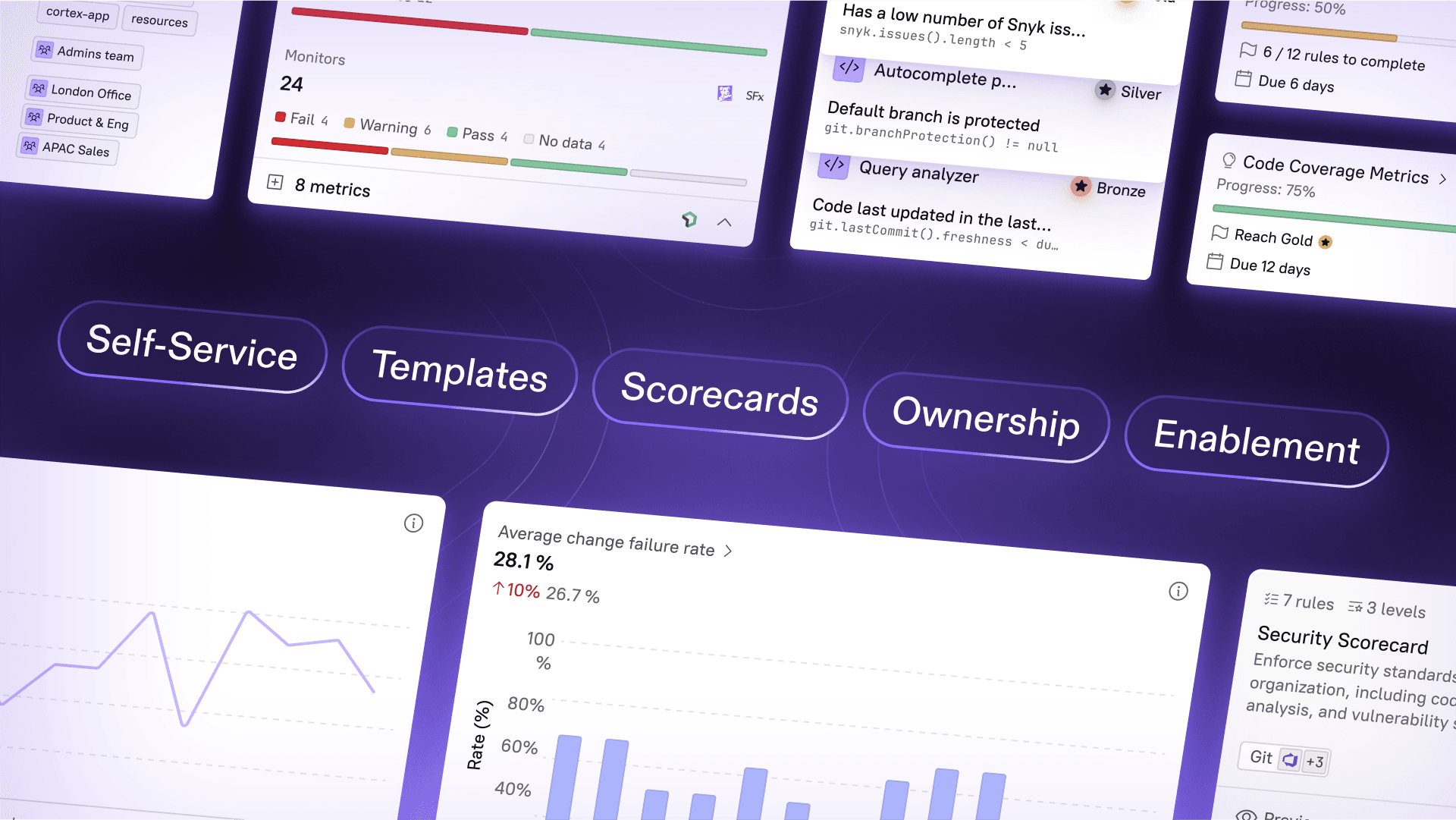

Developer self-service starts with access to information

Technical leaders often think they’re doing their developers a favor by making every tool and configuration self-service. Developers know the development experience best, the thinking goes, so we should empower them. But this kind of self-service, at scale, can be a trap.

The catch is that self-service, which can mean a lot of different things, is often reduced to its simplest and bluntest form when technical leaders use it as a shortcut to improving the developer experience. In reality, the bulk of these self-service options don’t work well unless you also make information access self-service.

Self-service is when leaders give developers full or nearly full access to all the tools they need (or think they’ll need) to do their work, including infrastructure, version control, configuration management, and more. Even more broadly, self-service can also refer to teams that allow their developers to purchase and adopt new products and open-source packages with minimal oversight.

Of course, the philosophy behind enabling self-service makes sense. Enabling developers to work as free of friction as possible is good, generally speaking.

The trouble is that self-service can make it too easy to spin up new services, add new projects, and adopt new tools. It reduces friction, but over time and across teams, it increases complexity. Technical leaders and developers alike tend to underestimate how bad this complexity can get because the complexity is an emergent, secondary consequence of self-service.

A development team is ultimately more than the sum of its parts, and that can be a good or bad thing. A development team full of people working in sync, leaning on each other, and complementing their skill sets can reach far. A development team full of people adding new services and tweaking systems as they see fit can soon sink under complexity.

Without self-service information access supporting the rest of your self-service options, developers are operating in the dark.

The costs of complexity without context

Without a way to track complexity as developers take advantage of self-service, development teams can be swamped by the costs of complexity—often without realizing that unmonitored self-service is the cause.

1. Cognitive overload becomes overwhelming

When complexity rises, developers have to juggle more tasks and maintain context on more processes. Over time, this degrades the developer experience.

The logic here is simple: One developer spinning up one new service is rarely a burden. But across teams, organizational efforts, and months, quarters, and years, every addition can contribute to a system that gets deeply complex.

Eventually, no one on a given team will have the necessary information about all the services in their purview, let alone information about other services across teams.

The damage this complexity does to productivity is well-documented. 2024 GitHub research shows, for example, that “developers who report a high degree of understanding of their code feel 42% more productive than those with low or no understanding.”

Onboarding new developers, which is already challenging in ideal circumstances, gets even more difficult. New developers will struggle to understand the complexity, and their work to understand the system will be slow and painful if much of the complexity comes from one-off decisions made ages ago.

The more services and components in action, the more cognitive overhead developers have to deal with. If there’s one service, for example, a team can likely maintain it easily. But if there are dozens of services, answering even simple questions becomes difficult.

“Did somebody deploy [SERVICE] recently?” It’s likely hard to say. “Where is [SERVICE] running?” The person who last touched the service might not even know.
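
To make the two questions above concrete, here is a minimal sketch of what a self-service answer could look like if each service had a catalog record. The `ServiceRecord` fields, the `CATALOG` contents, and both helper functions are hypothetical names chosen for illustration, not the API of any particular portal.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Dict, Optional


@dataclass(frozen=True)
class ServiceRecord:
    """Hypothetical catalog entry; the field names are illustrative only."""
    name: str
    owner: str
    environment: str            # where the service runs, e.g. a cluster name
    last_deployed_at: datetime
    last_deployed_by: str


# Example data, invented for this sketch.
CATALOG: Dict[str, ServiceRecord] = {
    "checkout": ServiceRecord(
        name="checkout",
        owner="payments-team",
        environment="prod-cluster-a",
        last_deployed_at=datetime(2024, 5, 1, 9, 30),
        last_deployed_by="alice",
    ),
}


def deployed_recently(record: ServiceRecord, now: datetime,
                      window: timedelta = timedelta(days=1)) -> bool:
    """Answer: did somebody deploy this service within the given window?"""
    return now - record.last_deployed_at <= window


def where_is_running(catalog: Dict[str, ServiceRecord],
                     name: str) -> Optional[str]:
    """Answer: where is this service running? None if it is not cataloged."""
    record = catalog.get(name)
    return record.environment if record else None
```

With records like these, both questions become a lookup instead of a conversation. The hard part in practice is keeping the records current, which this sketch does not address.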

These questions don’t seem burdensome on a one-off basis. But as they accumulate, productivity and morale can both fall. 2023 Stack Overflow research shows, for example, that nearly two-thirds of developers spend at least 30 minutes per day searching for answers and solutions.

This finding is echoed in our 2024 State of Developer Productivity research. According to our respondents, developers' time spent gathering project context and waiting on approvals were the two biggest productivity leaks.

Ninety percent of our respondents also said that improving productivity is a top initiative of theirs, which points to the severity of the information access problem. As long as developers have to search for answers, gather project context, and wait for approvals every day, productivity will keep draining.

2. Day-to-day operations remain constant distractions

When complexity grows, developers can lose time and focus as they repeatedly shift to non-creative tasks that take up more time and effort than they should.

Even the simplest of tasks can become onerous. Take deploying a small change, for example. That should be as simple as it gets, right? But in an overly complex environment, even that can become annoying.

Instead of simply doing the thing, you’re asking questions:

Does the repository you want to deploy from use the team’s legacy Jenkins setup?

Does the application in question use our modern GitHub Actions pipeline or our deprecated pipeline?

Does this particular service need a different deployment process because it has a different criticality level?

The less standardized your systems are, the worse this complexity gets. Developers can end up stuck in a cycle of repeatedly trying to regain context as they try to figure out whether to deploy a service using, for example, Argo CD, Kubernetes, or a homegrown pipeline.

All of this amounts to wasted time. DX research shows this is a common problem, with a 2024 study finding that “On average, developers report 22% of their time being wasted.”

Developers want to build, but they’re stuck asking questions, checking internal docs, and reworking work that should have already been done.

3. KTLO work takes over coding work

Keeping the lights on (KTLO) work is necessary but rarely fulfilling. When complexity is left unchecked, KTLO work grows and gets harder to complete.

Famously, developers ostensibly hired to program and code tend to spend much of their time on non-coding activities. Meetings take up a chunk of this time, but much of it goes to KTLO work – tasks that are technical but aren’t creative or of much value to the business.

KTLO work is primarily about maintenance – ensuring that the infrastructure and apps that the team has already deployed keep working as intended. Week to week, this can include some sizable tasks, such as squashing bugs, but it can also include a range of system tweaks that Google (and others) have taken to calling toil.

According to 2020 research by Vanson Bourne, a technology research agency, 77% of technical leaders believe that spending too much time on KTLO work is a significant obstacle for their organizations. Similarly, 89% believe that their organizations should be prioritizing innovation instead.

KTLO work is an area where business needs and developer wants align: Businesses would prefer developers spend more time on value-added work, and developers prefer creative, engaging work. One of the best ways to boost both productivity and experience, then, is to find ways to reduce KTLO work and increase coding work.

There are as many ways to reduce KTLO work as there are types of KTLO tasks (which is to say, many), but one warrants emphasis: incidents and mean time to recovery (MTTR).

Beyond the obvious reasons development teams would want to minimize the time services are down, lowering MTTR also results in less KTLO work and more coding work. When systems are complex, however, they’re more likely to fail and harder to diagnose and repair.

If teams can’t easily access information about the services that are down (or the services that are dependent on them), they have to research before they can act. If, for example, they suddenly need to find out who owns which service, where it lives, who deployed it recently, and more, then MTTR will lengthen, and more developers will have to be pulled in to help.
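
As a rough illustration of the incident lookup described above, the sketch below walks a dependency map to find every service affected by a failure and the owners who would need to be contacted. The `DEPENDS_ON` and `OWNERS` data and both function names are assumptions made up for this example; a real catalog would supply this information in its own format.

```python
from collections import deque
from typing import Dict, List, Set

# Hypothetical data: each service mapped to the services it depends on.
DEPENDS_ON: Dict[str, List[str]] = {
    "web": ["checkout", "search"],
    "checkout": ["payments-db"],
    "search": [],
    "payments-db": [],
}

# Hypothetical ownership records.
OWNERS: Dict[str, str] = {
    "web": "frontend-team",
    "checkout": "payments-team",
    "search": "search-team",
    "payments-db": "data-platform",
}


def impacted_services(depends_on: Dict[str, List[str]], failed: str) -> Set[str]:
    """Return every service that directly or transitively depends on `failed`."""
    # Invert the map so we can walk from a dependency up to its dependents.
    dependents: Dict[str, Set[str]] = {}
    for service, deps in depends_on.items():
        for dep in deps:
            dependents.setdefault(dep, set()).add(service)

    # Breadth-first walk; `seen` also guards against dependency cycles.
    seen: Set[str] = set()
    queue = deque([failed])
    while queue:
        current = queue.popleft()
        for parent in dependents.get(current, ()):
            if parent not in seen:
                seen.add(parent)
                queue.append(parent)
    return seen


def owners_to_contact(depends_on: Dict[str, List[str]],
                      owners: Dict[str, str], failed: str) -> List[str]:
    """List the owners of the failed service and of everything it impacts."""
    services = {failed} | impacted_services(depends_on, failed)
    return sorted({owners[s] for s in services if s in owners})
```

When this information is already recorded, responders can skip the research phase and go straight to the people who can act, which is where the MTTR savings would come from.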

Create signal before enabling noise

The more complex a system gets, the harder it is to find a signal within the noise.

Known risks, such as a lengthy MTTR, are scary, but unknown unknowns are even scarier. The more complex your system, the more you risk not even knowing what you don’t know.

With small companies and small teams, you can generally rely on everyone having context on almost everything. But when companies and teams scale, maintaining context in this kind of casual fashion is virtually impossible.

Instead of building a different way of maintaining context and containing complexity, however, most companies either default to bureaucracy (think of the traditional companies that tend to treat development as a cost center and route every question through IT) or chase self-service.

For startups, especially, self-service feels better than bureaucracy, but few realize they’re just signing up for complexity on delay. Eventually, heedless self-service results in complexity that emerges too slowly for anyone to fully notice how bad it’s getting.

Ultimately, the incentives aren’t aligned: Individual developers will want to use self-service to benefit their individual workflows, and they won’t have the context to know whether a benefit to one will contribute to a death-by-a-thousand-cuts effect for the rest of the team.

The core issue is that teams tend to think about self-service too late in the development lifecycle. If teams think of self-service only as a way to ship faster, they’re going to optimize the processes that speed up shipping at the expense of the processes that keep maintenance manageable.

Instead, we recommend thinking about self-service earlier in the lifecycle and focusing on how to provide developers with better, more complete information before creating more services.

Self-service isn’t bad, but it’s risky to enable too much self-service before building a system that captures the tradeoffs of system complexity, writ large, and the more granular tradeoffs of every tool and process developers adopt. This is why we consider self-service information access the foundation of a great developer experience.

When developers can get immediate context on the services already in action (including who deployed each one, where, why, etc.), they can make informed decisions about what they do next. Some problems, such as duplicated services, solve themselves (or never come up in the first place). With context, the incentives change, too – developers don’t want to contribute to complexity, but they rarely know they’re doing so without this kind of information access.
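
One way to picture the duplicate-service case is a check that runs before anything new is created. The sketch below is a simplified illustration that assumes services are described by free-form tags; the `EXISTING` data, the tag-overlap heuristic, and the `similar_services` function are hypothetical and stand in for whatever matching a real catalog would offer.

```python
from typing import Dict, Iterable, List, Tuple

# Hypothetical catalog entries, invented for this sketch.
EXISTING: Dict[str, dict] = {
    "email-sender": {"owner": "growth-team",
                     "tags": ["notifications", "email", "queue"]},
    "sms-gateway": {"owner": "growth-team",
                    "tags": ["notifications", "sms"]},
    "invoice-pdf": {"owner": "billing-team",
                    "tags": ["pdf", "billing"]},
}


def similar_services(catalog: Dict[str, dict], proposed_tags: Iterable[str],
                     min_shared: int = 2) -> List[Tuple[str, str, List[str]]]:
    """Return (name, owner, shared_tags) for services overlapping the proposal.

    A service counts as similar when it shares at least `min_shared` tags
    with the proposed service. Results are ordered by overlap, then by name.
    """
    proposed = set(proposed_tags)
    matches = []
    for name, entry in catalog.items():
        shared = proposed & set(entry["tags"])
        if len(shared) >= min_shared:
            matches.append((name, entry["owner"], sorted(shared)))
    matches.sort(key=lambda match: (-len(match[2]), match[0]))
    return matches
```

A developer about to create a new notification service could run this check first and find out that a similar service, and a team to talk to, already exists.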

Once information becomes self-serve, development teams can reduce ramp time and ensure they know where to start looking when something breaks. Over time, this increased visibility leads to system-wide improvements as developers make micro-investments in infrastructure as necessary and keep complexity in check.

Information is the essential ingredient to developer experience

A good cake requires a whole range of ingredients, including flour, eggs, sugar, salt, and all the flavorings that make it special. You can buy the best version of each ingredient, but if you’re missing even one, the cake will be disgusting.

Developer experience is similar. Many well-intentioned teams are trying to make cakes with only flour, sugar, and salt and are ending up with complex, bad-tasting messes.

This is why we consider self-serve information access so important. In this metaphor, information access isn’t a frosting that adds to the experience but a necessary ingredient that allows the rest of the cake to come together.

When developers spin up new services and tweak configurations without access to contextual information about systems (and their histories), complexity is likely. At scale, it’s inevitable.

With an Internal Developer Portal (IDP), you can add the missing ingredient – information – and ensure that developers make informed self-service choices. The data bears this out: our research shows that 48% of non-IDP users consider the time it takes to find context a primary impediment to productivity.

With an IDP, developers always know who owns which software and can ensure, especially over time, that no services get orphaned and that everyone knows who to ask for more information about each and every service. Over time, self-service information access—supporting other self-service capabilities—leads to a much healthier system unburdened by unnecessary complexity.

If leaders want to improve the developer experience – and the health of their systems along the way – they have to start earlier in the process and prioritize self-service information access.