Reliability metrics help software teams quantify how dependable and consistent their systems are over time. By converting a wide range of technical properties into hard data, they show how likely software is to run failure-free in a given environment over a given period. These metrics are a subset of developer-focused key performance indicators (KPIs), data that is gathered to track developers' output. Here the DORA framework is the most commonly used, originally focusing on two velocity and two stability metrics before adding a reliability metric in 2021. The growing importance of reliability as a measure of developer performance coincides with the greater prominence of cloud environments and the complexity that comes with them.

These numbers provide insights into considerations such as system uptime, error rates, response times, and the frequency of failures. Understanding and tracking reliability metrics allows developers to identify areas in need of improvement, ensuring that the software meets user expectations for performance and availability, and aligns with the organization's quality standards.

Users are increasingly being recognized as stakeholders in reliability. What was once an inside-baseball approach of technical developers applying technical standards to their peers now incorporates reliability as defined by users' expectations. This grounds reliability in delivery at the level of the user rather than just the team, which means there is genuine skin in the game for developers. Robust systems, happy users and productive developers make for a successful business, and reliability metrics can contribute to all of these.

This blog will focus on the different reliability metrics that you can implement, what you should be aiming to gain from using these metrics and some best practice advice on implementation.

What are reliability metrics?

One technical definition of software reliability is the probability of failure-free operation of a computer program in a specified environment for a specified time. In other words, the likelihood that everything is working as expected. By converting a wide range of technical properties into hard data, reliability metrics provide quantifiable information to understand how and why we can expect software to run without failure, and where to look when we don't meet the standards that we set.

These metrics are similar to Site Reliability Engineering (SRE) metrics in that both quantify the stability, performance and health of software applications. While SRE KPIs take a broader scope, reliability KPIs are focused more on system failure. For example, SRE metrics include Service Level Indicators (SLIs), which measure the level of service actually delivered, and Service Level Objectives (SLOs), which set target values for those SLIs.

Reliability KPIs are often downstream of SRE. One example is the Service Level Agreement (SLA), which is based on SLOs and given to customers to define the expected reliability of the services they are using. Reliability KPIs also include data focused on system reliability, such as downtime and the time between breakdowns. SRE applies a magnifying glass to reliability in a broad context, from customer experience and customer satisfaction to maintainability across different operating conditions. Reliability metrics apply a microscope to software reliability for detailed data on the number of failures, outages in a given time and availability.

Metrics like these are only as useful as their application, and treating them as abstractions is a good way to fall prey to Goodhart’s Law. A fun method of reliability testing is chaos engineering, a practice of using controlled experiments to identify failure modes. This involves injecting faults into systems in real time to surface vulnerabilities and induce failures. It’s distinct from traditional testing, which focuses on verifying that systems work as expected, because chaos engineering aims to identify unknown unknowns and reduce Knightian uncertainty.
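To make the idea of fault injection a little more concrete, here is a toy, in-process sketch in Python. Real chaos engineering tooling works against running infrastructure rather than a single function, so treat this purely as an illustration. The names `with_faults`, `call_with_retries` and `fetch_price` are hypothetical and invented for this example.

```python
import random


class InjectedFault(Exception):
    """Raised by the fault injector to simulate a dependency failure."""


def with_faults(func, failure_probability, rng):
    """Wrap func so that each call fails with the given probability."""
    def wrapper(*args, **kwargs):
        if rng.random() < failure_probability:
            raise InjectedFault("simulated failure")
        return func(*args, **kwargs)
    return wrapper


def call_with_retries(func, attempts):
    """Call func up to `attempts` times and return the first success."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    last_error = None
    for _ in range(attempts):
        try:
            return func()
        except InjectedFault as error:
            last_error = error
    raise last_error


def fetch_price():
    """Hypothetical stand-in for a call to a downstream service."""
    return 42


# Inject faults into 30% of calls and count how many requests still succeed
# when the caller retries up to three times. The seed makes runs repeatable.
rng = random.Random(0)
flaky_fetch = with_faults(fetch_price, failure_probability=0.3, rng=rng)
successes = 0
for _ in range(1000):
    try:
        call_with_retries(flaky_fetch, attempts=3)
        successes += 1
    except InjectedFault:
        pass
print(f"{successes} of 1000 calls survived injected faults")
```

The point of an experiment like this is the question it asks: does the calling code cope when a dependency misbehaves, and how often does a failure still reach the user?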

By quantifying the dependability and stability of software systems, reliability metrics help developers identify and address potential issues before they impact end-users. When applied across the lifecycle of applications, best practice software reliability dramatically reduces the cost of updates and outages after software has shipped.

Some of the most commonly used KPIs for reliability are fairly self-explanatory and focus on the operational time an application is available, the average time between failures and the repair time it takes to recover from an outage. These include uptime, mean time between failures and mean time to recover.

Let’s look at some of these in greater detail and include some examples.

Examples of reliability metrics

Uptime percentage: measures reliability by dividing the time that a system is operational, or available, by the total time in a given period. So if a system was available for 950 hours over a 1000 hour period, the uptime percentage would be 95%. Uptime targets are usually expressed in "nines", with 99.999% ("five nines") often considered the gold standard.
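As a rough illustration, the uptime calculation can be sketched in a few lines of Python. The function name and the hour-based inputs are our own assumptions for the example, not part of any particular monitoring tool.

```python
def uptime_percentage(operational_hours, total_hours):
    """Return uptime as a percentage of the observation period."""
    if total_hours <= 0:
        raise ValueError("total_hours must be positive")
    if not 0 <= operational_hours <= total_hours:
        raise ValueError("operational_hours must be between 0 and total_hours")
    return 100.0 * operational_hours / total_hours


# The example from the text: available for 950 of 1000 hours.
print(uptime_percentage(950, 1000))  # 95.0
```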

Mean time between failures (MTBF): used to predict the time between one failure and the next in a system. It's calculated by dividing the total operating time by the total number of failures, so if your application crashed twice in a 1000 hour period, your MTBF will be 500 hours.
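A minimal sketch of that division in Python might look like the following. The function name is hypothetical, and the sketch assumes you already have the total operating hours and a failure count to hand.

```python
def mean_time_between_failures(total_operating_hours, failure_count):
    """Return MTBF in hours: operating time divided by number of failures."""
    if total_operating_hours < 0:
        raise ValueError("total_operating_hours cannot be negative")
    if failure_count <= 0:
        raise ValueError("MTBF is undefined without at least one failure")
    return total_operating_hours / failure_count


# The example from the text: two crashes in a 1000 hour period.
print(mean_time_between_failures(1000, 2))  # 500.0
```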

Mean time to failure (MTTF): like MTBF, this tracks how often failures occur within a system. The difference is that MTBF applies to repairable systems and measures the time between unscheduled repairs, whereas MTTF applies to components that are replaced rather than repaired and measures the expected time until they fail. If you owned a motorcycle, the MTBF would be the time between unscheduled repairs to things like tires and oil, whereas the MTTF would be the time before the engine needs to be replaced.

Mean time to repair (MTTR): the average time it takes to fix a system. It is calculated by dividing the total time spent on repairs by the number of repairs, and includes testing time as well as repair time. So, if it takes two, four, and six hours to fix three different bugs, the MTTR for the system is four hours.
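In code, MTTR is simply the average of the individual repair durations. Here is a small sketch, with a function name we have made up for the example:

```python
def mean_time_to_repair(repair_hours):
    """Return MTTR in hours: total repair time divided by number of repairs."""
    if not repair_hours:
        raise ValueError("at least one repair is required")
    return sum(repair_hours) / len(repair_hours)


# The example from the text: three bugs taking two, four and six hours to fix.
print(mean_time_to_repair([2, 4, 6]))  # 4.0
```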

Failure rate: indicates how frequently an engineered system or component fails. It's often calculated by dividing the number of failures by the total time observed. For example, if your software encountered 10 failures over 1000 hours, the failure rate is 0.01 per hour.
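The same arithmetic as a short Python sketch. The function name is an assumption for the example. Note that when failure rate and MTBF are measured over the same operating time, one is the reciprocal of the other.

```python
def failure_rate_per_hour(failure_count, observed_hours):
    """Return failures per hour over the observation window."""
    if observed_hours <= 0:
        raise ValueError("observed_hours must be positive")
    if failure_count < 0:
        raise ValueError("failure_count cannot be negative")
    return failure_count / observed_hours


# The example from the text: 10 failures over 1000 hours.
rate = failure_rate_per_hour(10, 1000)
print(rate)      # 0.01 failures per hour
print(1 / rate)  # 100.0 hours between failures, i.e. the MTBF
```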

Service level agreement (SLA) compliance: indicates how well you’re meeting the service levels that you promised your customers. Your contract will typically provide parameters by which incidents should be resolved (time, cost, prioritization etc). This metric, expressed as a ratio or a percentage, considers the number of incidents resolved in compliance with the SLA relative to the total number of incidents.
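Here is one way this could be sketched in Python, assuming the SLA is expressed purely as a maximum resolution time per incident. The `Incident` class, its fields and the sample data are all hypothetical. A real SLA may also cover cost, prioritization and other terms.

```python
from dataclasses import dataclass


@dataclass
class Incident:
    resolution_hours: float  # how long the incident took to resolve
    sla_limit_hours: float   # resolution time promised in the SLA


def sla_compliance_percentage(incidents):
    """Return the share of incidents resolved within their SLA limit."""
    if not incidents:
        raise ValueError("at least one incident is required")
    compliant = sum(
        1 for incident in incidents
        if incident.resolution_hours <= incident.sla_limit_hours
    )
    return 100.0 * compliant / len(incidents)


# Made-up data: three of the four incidents were resolved in time.
incidents = [
    Incident(resolution_hours=1.5, sla_limit_hours=4),
    Incident(resolution_hours=3.0, sla_limit_hours=4),
    Incident(resolution_hours=6.0, sla_limit_hours=4),
    Incident(resolution_hours=0.5, sla_limit_hours=1),
]
print(sla_compliance_percentage(incidents))  # 75.0
```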

Reliability growth: this tracks improvement to a system’s reliability over time, charting how systems become more robust. It can be calculated in different ways, and any of the above metrics can feed into it, such as increased uptime or MTBF, or higher levels of SLA compliance.
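One simple way to chart reliability growth, assuming you already record a metric such as MTBF for each period, is to look at the percentage change from one period to the next. This is only one of several possible approaches, and the function name and quarterly figures below are invented for illustration.

```python
def period_over_period_change(values):
    """Return the percentage change between each consecutive pair of values."""
    changes = []
    for previous, current in zip(values, values[1:]):
        if previous <= 0:
            raise ValueError("values must be positive")
        changes.append(100.0 * (current - previous) / previous)
    return changes


# Hypothetical MTBF in hours for three consecutive quarters.
quarterly_mtbf_hours = [400, 500, 600]
print(period_over_period_change(quarterly_mtbf_hours))  # [25.0, 20.0]
```

Positive values mean the system is failing less often than in the previous period. A run of negative values is a sign that reliability is slipping.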

How to choose the right reliability metrics

Reliability metrics can identify vulnerabilities and pain points in your system, measure the impact of technical changes and ultimately improve user experience. They offer quantitative evidence that everything is working as it should. They need to align with developer experience and company culture, and there are several considerations to getting them right.

Relevance to business goals

Metrics are only as valuable as their impact on business objectives. For an online retailer offering differentiated products, 99.9% uptime might be a perfectly acceptable target, but a high-frequency trader would most likely want several more nines. For startups, reliability growth might be more important than mean time between failures, but established brands with reputations for quality might focus more on current performance.
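To get a feel for what different uptime targets mean in practice, it can help to translate them into an annual downtime allowance. The sketch below assumes a 365-day year and uses a function name of our own choosing.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes, ignoring leap years


def allowed_downtime_minutes_per_year(uptime_target_percent):
    """Return the downtime, in minutes per year, that an uptime target permits."""
    if not 0 <= uptime_target_percent <= 100:
        raise ValueError("uptime_target_percent must be between 0 and 100")
    return (100.0 - uptime_target_percent) / 100.0 * MINUTES_PER_YEAR


for target in (99.9, 99.99, 99.999):
    minutes = allowed_downtime_minutes_per_year(target)
    print(f"{target}% uptime allows about {minutes:.1f} minutes of downtime per year")
```

A 99.9% target leaves room for almost nine hours of downtime a year, while 99.999% leaves only a little over five minutes. Each additional nine cuts the allowance by a factor of ten, which is why the right target depends so heavily on the business.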

User-centricity

As engineers we can often fall into the trap of considering problems from a technical perspective, and forget the importance of the user. Considering reliability from the perspective of the user informs our choice of metrics and helps ensure our improvements translate to brand value and improve the bottom line.

Measurability and quantifiability

While we like a mix of quantitative and qualitative metrics for things like developer experience and productivity, when it comes to reliability metrics, quantitative is king. Failures and vulnerabilities should be clearly defined, with time intervals closely tracked so that they yield clear observability.

Actionability

These quantitative metrics should be chosen for their ability to drive actionable improvements. There’s no point tracking the rate of occurrence of failure if you don’t have a means to fix the problem. Reliability metrics should be used with a bias towards action.

Completeness and coverage

The metrics that you choose should cover reliability across the entire IT estate. There is no value in optimizing reliability and minimizing errors in some assets and not others. Metrics need to consider potential failures across all time frames and all types of software.

Benchmarking

By using clear quantitative data, reliability metrics are well-suited to benchmarking against industry standards or competition. Provided you are ultimately guided by business goals rather than overly-focusing on third parties, benchmarking can be an excellent way to drive reliability standards.

Benefits of tracking reliability metrics

When done correctly, reliability KPIs should drive standards and improvement for both individual developers and the larger business. They can drive higher standards in code quality and a more strategic approach to software development for individuals, while improving cost efficiency and business continuity across the enterprise.

This includes:

Early issue detection: using reliability metrics well means building an operational culture that surfaces issues quickly and deals with them before they cause significant disruption.

Improved user satisfaction: good reliability leads to a trusted relationship with users and this dependability can act as a source of competitive advantage.

Enhanced business reputation: system failures are public knowledge in today’s connected world, and consistent reliability builds a healthy reputation among suppliers, partners and users.

Cost savings: getting reliability right means solving problems quickly, often before they metastasize into bigger and more expensive failures. Prevention is better than cure, and reliability is the best form of prevention.

Data-driven decision-making: the quantitative nature of reliability makes it ideal for data-driven decisions. By placing this at the heart of enterprise IT, it supports a culture of applying data to meet business objectives that can be useful beyond developers and maintenance teams.

Competitive advantage: all of these factors can combine to build a competitive advantage rooted in dependability and robust operations.

Best practice for implementing reliability metrics and using them for continuous improvement

While the basis for reliability data is quantitative, implementing these metrics effectively still requires a lot of subjective judgment. Tracking and benchmarking these metrics should be done expressly to drive continuous improvement, and should be built into the software development culture.

There are challenges around ensuring high quality data, securing buy-in and driving improvement at scale. Getting this right means emphasizing data collection, clear communication on goals and scalability across the organization.

Some best practice considerations include:

Define clear objectives

Before rushing around to gather data, consider what good looks like in software reliability. Do you want to reduce downtime costs at the back end or improve user experience at the front end? Reliability looks different depending on the organization and market, and getting it right starts with clear objectives.

Select relevant metrics

Not all reliability metrics will be relevant to every system or objective. Select metrics that align with your objectives and provide meaningful insights into your system's reliability.

Implement monitoring tools

Data gathering is a crucial aspect of tracking reliability, and your monitoring needs to be reliable for it to work. Getting tooling right is crucial, and you should look for tools that integrate well with your current systems. Try application performance monitoring tools, log analyzers and incident management platforms as a starting point.

Analyze and interpret data

Once you’re clear on what you want and you’ve got the monitoring in place, you need to get to work analyzing your outputs to derive insights. Remember that context is crucial here, and you should always dig into the second-order effects when considering any data points.

Iterative improvement process

Armed with clear quantitative insights from impeccable data, it’s time for the most important step of all: implementing an iterative improvement process. Ground changes in objectives, ensure quantitative data forms the basis for your improvements and resist the urge to make it a project. This needs to be a process of continuous improvement.

Software reliability is a culture and a process, not a state. To learn more about implementing this culture, check out this webinar.

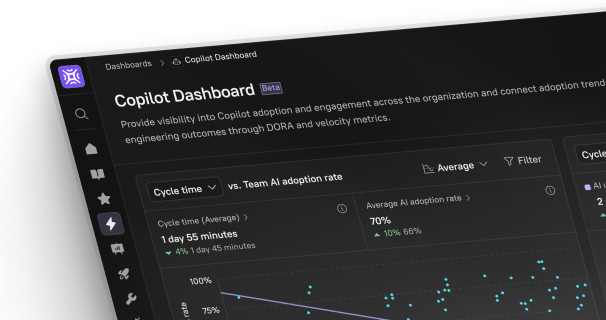

How Cortex can help

Cortex offers an Internal Developer Portal (IDP) that can do the heavy lifting around tracking and analyzing reliability metrics. This acts as a repository for reliability metrics and documentation related to reliability. By integrating it into the platform used for developer collaboration, Cortex can give reliability metrics the prominence and emphasis that they require within the engineering team.

Cortex’s integrations and plug-ins give you access to real-time data that can inform your reliability metrics. These can allow you to build and embed apps directly into the IDP that can draw data from a wide range of internal and third party data sources.

This data can be presented and analyzed using Eng Intelligence, which centralizes all data and gives you a one-stop shop for reliability. This can also extend to using Scorecards, quickly tracking progress and identifying bottlenecks at the level of individuals, teams and the organization as a whole.

Finally, Cortex can help develop a culture of reliability by embedding the relevant KPIs in a custom Developer Homepage. By providing real-time data in an accessible location and in the correct context, Cortex can help you achieve best practice reliability metrics quickly and effectively. To get started on this journey, book a demo today.