Containerized microservices have been the gold standard for cloud computing since they replaced the monolith architecture over a decade ago. The flexibility, scalability, and velocity they enable for teams make them an obvious choice. Yet, a strict interpretation of one service for one function doesn’t quite serve everyone, especially when architectures get large. We’ll discuss how flexibility in service architecture might be the way to go.

Before microservices, the monolith

Although we won’t go into much detail here about monoliths, it’s hard to talk about microservices without acknowledging their origin story. Microservices emerged as a response to the limitations of monolithic architectures, which were the industry norm through the 2000s. Monoliths are convenient, especially for lower-traffic applications, because everything happens in shared memory (at the expense of performance). However, by the 2010s, with the rise of cloud computing and an increase in online traffic, the microservice architecture quickly grew in popularity. The publication of the 12-Factors by Heroku gave the industry a framework to follow, helping to drive widespread adoption of the pattern. Instead of cramming the whole application into one giant service, teams split their applications into smaller, more manageable microservices.

What are microservices?

A microservice architecture structures an application as a collection of small, independent services, which should have a clear, tightly-scoped functionality or represent a specific business task. For example, if you're hosting a web application for an e-commerce site, you might have a set of individual microservices for user management, payment services, notifications, inventory, and recommendations among others.

Each microservice has its own database and shouldn’t access the databases of other services, and they communicate with other microservices through well-defined APIs, typically over HTTP. A microservice is deployed separately from other services and generally decoupled from the client. “Deployed separately” is key; if you’re looking at a single repo but it’s deployed together with other services, it may be a macroservice or simply a monolith divided into modules. Learn about the differences between microservices and monoliths here.

Benefits of microservices

Microservices solved some of the problems of monoliths, especially around scalability and velocity. Some of these benefits are:

Portability

Portability is one of the most important benefits of microservices, and one of the top reasons teams choose to use them. Containers, like Dockers or Kubernetes, package an application, its dependencies, and other necessary components into a standardized unit known. Not all microservices run in containers, but it’s extremely useful to structure them that way, particularly for businesses with hybrid or multi-cloud solutions. Containers provide a consistent and isolated environment for running applications, so you run containerized microservices on any cloud provider (Google, AWS, Azure, etc.) without having to adapt or change the service.

Velocity

One of the great benefits of a microservices architecture is the increase in velocity, both in development and deployment speed. Decoupled and deployable services enable engineers to launch features and experiment with new ideas without shared dependencies or concerns about a large code base. They’re also easier to deploy and roll back than the monolith, as you’re deploying smaller binaries to a fewer number of machines.

Scalability

Microservices architecture provides scalability by allowing individual services to be scaled independently based on demand. Only the services experiencing high traffic need to be scaled, rather than scaling the entire application. As a result, organizations can efficiently allocate resources and handle fluctuations in workload without over-provisioning or underutilizing infrastructure. With monoliths, essentially the only option is to scale vertically (i.e., using bigger and better hardware), or to statically scale horizontally which will almost always waste resources. Container microservices allow for dynamic horizontal scale by adding new container instances as needed.

Fault isolation and reliability

Microservices promote fault isolation by encapsulating functionality within independent services. If a service fails or encounters issues, it’s not taking down the entire application with it. Instead, failures are contained within the affected service, allowing other services to continue operating normally (downstream effects aside), resulting in an overall more reliable system.

Ownership

As each microservice is responsible for a specific business function or feature, teams have clear ownership and accountability for their services. This encourages teams to take ownership of the entire development lifecycle, from design and development to testing and maintenance, saving other engineers from learning entire new parts of the codebase.

Flexibility in language and frameworks

Microservices do not share dependency libraries with other services, which means engineers have the freedom to choose the best languages for the services they’re writing, allowing for additional flexibility and speed in development.

Microservices gone wrong

When it comes to architectural choices, there are always going to be tradeoffs. Some of the aspects that make microservices helpful also can cause issues.

As the number of microservices grows, so too does the effort required to deploy, test, monitor, and maintain them. Managing a large number of services requires additional infrastructure, tooling, and operational overhead, and the cost of maintaining the entire infrastructure grows. If this scaffolding is in place, spinning up an individual service may be easy, but congratulations, you’re now on call for a new service.

With a large number of services, the number of calls to service dependencies to complete a request grows rapidly as well, so latencies can start to compound. If you call a number of downstream services, you have to deal with the case when one or more calls fail, the workaround for which can look similar to a monolith-style check if the transaction finished successfully. The call graph gets complicated so parsing the debugging logs and finding where a failure occurs is tedious to say the least.

Uber is a case study in microservices gone awry. Uber moved to a microservices architecture around 2012, when they had outgrown their two monolith services. By 2018, they had a system of over 2,200 microservices that was causing them various problems. Deploying a new service had become difficult and tracing an API call was complicated. It was no longer practical.

Some of the other issues that arise with microservices can’t be blamed on the philosophy, but are instead the result of user error. With all the freedom that comes with microservices, the temptation to use them in less maintainable ways is too much. When teams adopt microservices, they sometimes run into the problems creating new services for every small functionality or requirement. This can sometimes lead to nanoservices, services that are too small to merit the overhead of running and maintaining them.

The one engineer who is obsessed with Scala has the opportunity to create a new service. When it’s easy for him to spin up a service, he creates his shiny new Scala service that performs an important but less visible function. Years later, long after he’s left the company, when this service finally needs a change, it’s written in a language that no one on a related team knows how to write.

Eventually, the accumulation of services like these that lost their owners can also result in "dead" or "orphaned" services—services that are no longer actively maintained or used but still exist. Over time, these services accumulate technical debt and contribute to architectural complexity without delivering tangible value.

Macroservices, a middle ground

As teams moved to microservices, many found that a faithful interpretation of the microservices dogma didn’t work for them, whether it was due to operational overhead or overly complex service topographies. Regularly, due to either careful planning or user error, teams started to create services that were small-ish or medium size, encompassing a few different responsibilities and data sources. In this sense, they break the strict definition of microservices, but are still independently deployed services in the cloud.

Engineers tend to like software principles that work in absolutes, so those medium-sized or “microservice-like” services don’t have a singular pedagogical name that is used consistently. These services have been called mesoservices, miniservices, macroservices, or even (technically incorrectly) microservices, as the term “microservices” is sometimes the catch-all name for cloud services that aren’t monoliths. The terms mesoservice or miniservice are often used to indicate more “medium-sized” services, in contrast to macroservices, which are for “larger-size” services. For our purposes, mostly because determining what constitutes medium versus large can be subjective and warrants a separate discussion, we’ll use the term “macroservice” for anything larger than a microservice.

Macroservices provide a level of abstraction that allows microservices to be grouped together and share state, reducing the number of service boundaries while still maintaining some degree of independence and modularity. This approach can be beneficial in certain contexts where managing a large number of fine-grained microservices becomes cumbersome, yet the advantages of microservices in terms of scalability and fault isolation are still desired. This becomes pretty popular in practice.

Uber, to address the problems that came with thousands of microservices, pivoted to a philosophy that they call “domain-oriented microservice architecture.” They group related microservices into collections of independent domains. In general, the concept of domain-oriented microservice architecture can be applied to help develop well-defined individual microservices or organize services into macroservices. Macroservices access shared data stores used by other macroservices or monolithic applications, and they grant access to multiple data objects and processes. These features reduce complexity by sharing persistent state between services.

While we’re not covering how to move to microservices from a monolith here, teams can also create larger macroservices as part of that transition process. The first step is often to divide a monolith into relatively bigger modules that are logically independent from each other, even if it’s only a couple services. Moving into just a few larger macroservices that are grouped by domain can unlock immediate benefits and sets the stage for pulling out small services later.

Is a large macroservice just a monolith with extra steps?

But wait, if we continue to group more services into one, have we come back full circle to the monolith? Not exactly.

Of course, sneaking all the services back into one giant macroservice could eventually lead to some unpleasant results. You could potentially run into some of the drawbacks of a monolith, such as slower development, lower reliability, and difficulty scaling compared to a purely microservice system. Large macroservices can act similarly to a monolith, but because the services have their own deployment lifecycle, they provide more flexibility and portability for development and scaling.

Microservice purists argue that macroservices are merely a symptom of poorly implemented microservices. In some cases, there are certainly poorly designed microservices that lead to subpar macroservices in a system. But these can be avoided when you organize with intention and in ways that follow microservice principles, like organizing the macroservices by domain. When a monolith isn’t divided up well into microservices, the problem isn’t that macroservices aren’t the right choice, but how these macro- and microservices were chosen in the first place.

What’s the right size service, then?

Ultimately, the best architecture is the one that fits the needs of the application at hand, taking into consideration what services already exist. We can follow a few general rules of thumb.

A pure microservice architecture works well in many contexts. Yet, starting a new project with a microservice architecture might not always be the best approach, even if the application is expected to grow in size and complexity. If you start with a monolith, you’ll likely get faster development initially, easier testing, and a more straightforward deployment process. As the application evolves and the team gains a better understanding of the domain and the system's requirements, it becomes easier to identify the natural boundaries and seams along which the monolith can be split into microservices, by first stepping through using macroservices.

If you’re considering changing your current architecture, understand the benefits you would gain from moving to more microservices or macroservices, and if that’s worth the tradeoffs as well as the development time. There are many ways that an existing service can be improved without going straight to reorganizing your architecture, which is tedious at best, spending development cycles on moving things around instead of working on the product. Evaluate whether the challenges you’re facing can be addressed by improving management and orchestration of the existing microservices. If you’re looking to make it easier to keep track of services and which teams own them, you might want to look into better communication or tooling, like an internal developer portal.

The best choice for an ideal architecture is not a simple, cut-and-dry answer. The right answer for most is not a flashy one, and is likely something in the middle: medium sized services and medium size code repositories.

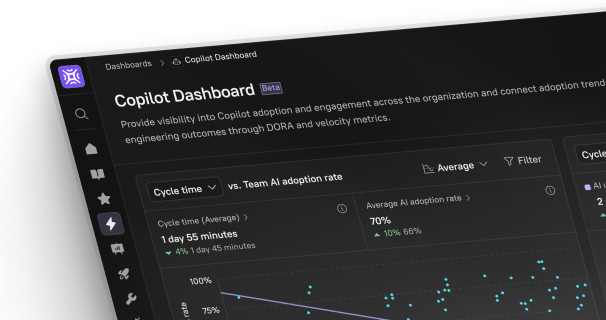

Cataloging services to avoid unwieldy or zombie microservices

Whether you have thousands of microservices like Uber had, or you’re creating new macroservices to modularize your application, your engineers need a way to keep track of all of the services in your organization. Consider using an internal developer platform (IDP) like Cortex. Abandoned APIs can be a pretty big concern for developers, but Cortex allows you to manage and keep track of all your microservices in one place. By including all your services in a service catalog, you can track the ownership, downstream dependencies, and documentation for them, to reduce the complexity of maintenance and prevent APIs and services from being left behind. Scorecards show the health of these services, aggregating metrics into a single place. An IDP like Cortex can be a game-changer for keeping your microservices and macroservices organized and up-to-date—so you can focus on building apps your users will love.

To see how you can better manage your microservices, macroservices, and everything in between, book a demo with us.